Metrics install automatically if you have Redgate Monitor installed.

If you are using Redgate’s SQL Server monitoring tool, Redgate Monitor, you can instantly install and run this metric on your servers.

Excessive counts of Virtual Log Files (VLF) in a transaction log can lead to performance problems with the transaction log, including restore and recovery times. Excessive VLFs can occur when a transaction log is resized many times, through autogrowth for example.

The ideal value or threshold for this metric is environment dependent; excessively high values can cause high write times when writing to the transaction log.

If this metric indicates an increased number of VLFs in the transaction log, the transaction log should be rebuilt using the steps outlined in Kimberly Tripp’s blog post, 8 Steps to better Transaction Log throughput. Also see Transaction Log Physical Architecture.

Note: This metric relies on the use of an undocumented DBCC command from Microsoft. The output of this command may change (as it did from SQL Server 2008 to SQL Server 2012), so this script may not work correctly on future versions of SQL Server.

Note: This metric works on SQL Server 2000 SP4 and later versions.

Metric definition

Name

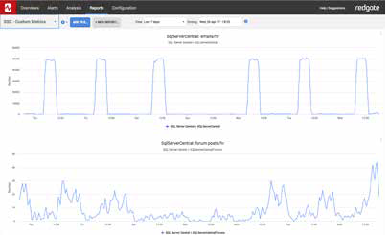

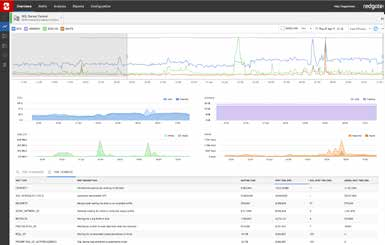

Transaction log fragmentation

Description

Explanation: This custom metric measures the number of VLFs in the transaction log. A high number of VLFs can affect write performance and impact restore and recovery times.

Guideline values: You can use the 50 VLF rule for a log file < 25GB. Anything larger than 25GB, a healthy log, in terms of VLF number, should be determined by log size / 512MB.

Possible solutions: If this metric indicates an increased number of VLFs in the transaction log, the transaction log should be rebuilt using the steps outlined in Kimberly Tripp’s blog post, 8 Steps to better Transaction Log throughput. Also see Transaction Log Physical Architecture.

The T-SQL query that will collect data

Instances to collect from

Select all

Databases to collect from

0

Collection frequency

86400

Use collected or calculated values

Leave the Use a calculated rate of change between collections check box unchecked

Metric collection

Enabled

Alert definition

Alert name

High number of VLF in the transaction log

Description

This alert is raised if excessive Virtual Log Files (VLF) counts occur. This can lead to performance problems with the transaction log, including restore and recovery times. Excessive VLFs can occur when a transaction log is resized many times, through autogrowth for example.

You can rebuild the transaction log by following the steps outlined in Kimberly Tripp’s blog post, 8 Steps to better Transaction Log throughput. Also see Transaction Log Physical Architecture.

Raise an alert when the metric value goes

Above the defined threshholds

Default threshold values

| High: | User determined threshold |

| Medium: | User determined threshold |

| Low: | Disabled |

The value of the thresholds (too high or too low) need to be specified for your particular environment, use values suited to your server performance.

Raise an alert when the threshold is passed for

1 collection

Alert is

Enabled

17,957

17,957